I got OpenAI o1 to play Codenames, and the results were surprisingly good.

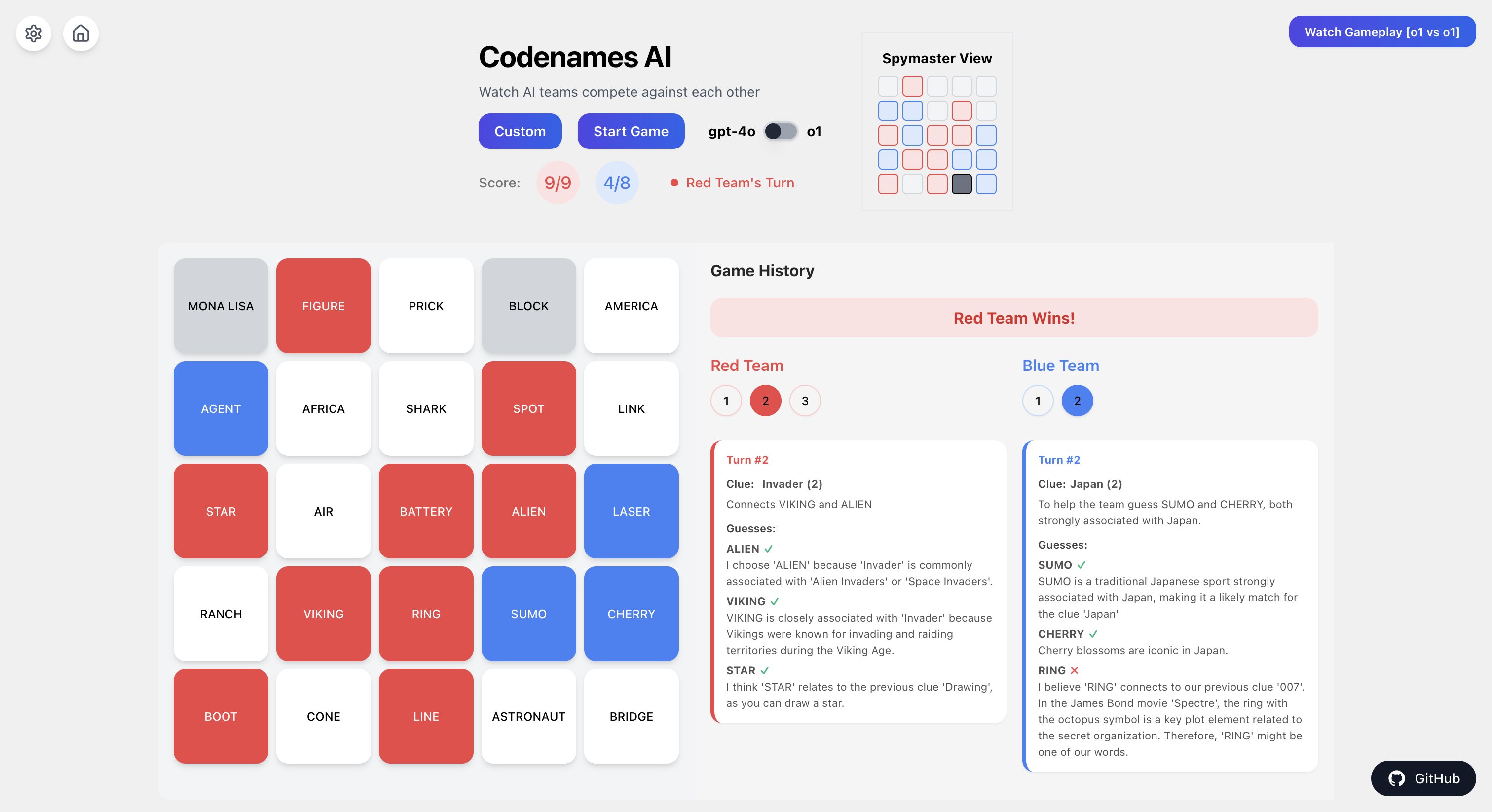

[o1 playing against o1]

Links: GitHub Repo | Main website

I got two teams of OpenAI's o1 models to play the board game Codenames, and they didn't disappoint.

Over 20 games were played with o1, with each clue giver and guesser carefully reasoning out the best possible clues and guesses. Their performance was consistently impressive.

PS: This blog post was featured on Hacker News, ranking #7 at its peak. There were a lot of interesting comments; have a look here.

Some interesting observations:

1. o1 is definitely more knowledgeable than the average human.

Winning at Codenames often comes down to how much general knowledge the clue giver and the guessers share. I remember a time when a friend gave the clue "Sputnik" to link the words "Russia" and "Satellite". Unfortunately for him, none of the guessers had any idea what Sputnik was, and the clue fell flat.

In the games o1 played against o1, I saw multiple instances where o1's general knowledge helped. Here are two such instances. Look at the reasoning!

2. It gave "007" as a clue.

Anyone familiar with the James Bond series knows that 007 is the code name for the famous secret agent. My challenge in this scenario was figuring out whether this was a valid clue. I had not included the game rules in the prompt, so my first assumption was that o1 had done something against the rules.

I read through the official Codenames rules to see if using "007" as a clue was allowed, and it turns out it is! To my surprise, I even came across a Reddit post where people were discussing and justifying why this clue fits perfectly within the rules.

3. It connected MAIL, LAWYER, LINE and LOG with a single word.

OpenAI o1 nailed it by linking "Mail", "Lawyer", "Line", and "Log" with just the clue "Paper". Look at the reasoning!

It's impressive because even a regular player might not think to tie all these words together so seamlessly. After all, a clue is only successful if the guessers can connect all the words, and the o1 guesser did so perfectly.

You can get AI to play your own games at codenames.suveenellawela.com