I Made AI Agents Play 50 Games of the Social Deception Game Avalon in a Row.

They developed reputations, trust issues, and got scarily good at lying.

What happens when you put five AI agents in a room and make them play a social deception game, not once, but fifty times in a row? They start remembering who betrayed them. They build reputations. And they get disturbingly good at performing deception.

The Setup

I've been playing The Resistance: Avalon with the same group of friends for over a year. It's a hidden-role game where "good" players try to complete missions while "evil" players secretly sabotage them. But there's a twist: even if good wins three missions, they can still lose. One player on the good team is secretly "Merlin" and knows who the evil players are. If evil correctly guesses who Merlin is at the end, evil wins anyway. This creates a fascinating dynamic where Merlin must help their team without being too obvious about their knowledge.

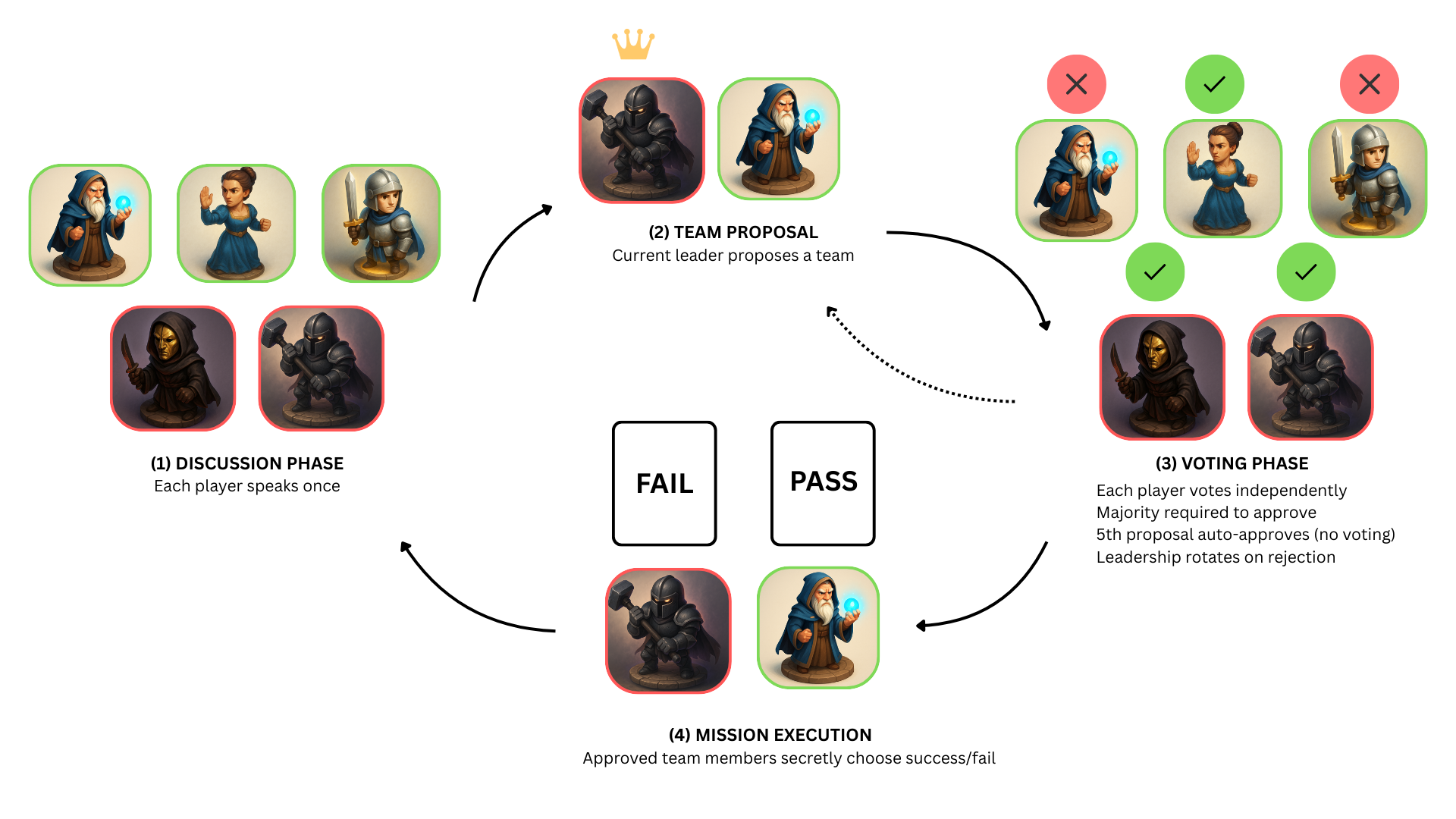

How Avalon works
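The win condition described above is compact enough to write down. This is a minimal sketch of the rule as stated, not code from the experiment. The function name and arguments are made up for illustration, and it assumes the standard rule that evil also wins outright once three missions fail.

```python
def avalon_winner(mission_results, merlin, assassin_guess):
    """Return "good" or "evil" for a finished game.

    mission_results: list of booleans, True when a mission succeeded.
    merlin: name of the player secretly holding the Merlin role.
    assassin_guess: the player evil names as Merlin; only matters
        when good has already won three missions.
    """
    successes = sum(mission_results)
    failures = len(mission_results) - successes

    if failures >= 3:
        # Assumed standard rule: three sabotaged missions is an evil win.
        return "evil"
    if successes >= 3:
        # The twist: good still loses if evil identifies Merlin.
        return "evil" if assassin_guess == merlin else "good"
    raise ValueError("game is not finished yet")
```

So a Merlin who steers every vote correctly can still hand evil the game by being too easy to spot.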

When you play with the same group repeatedly, you build mental models of how everyone plays. I could easily say "Z talks less when he's evil" or "JY is the most likely to fail the first mission if he is evil". These patterns become part of the game itself.

I wanted to test whether AI agents would develop similar dynamics. This felt especially interesting given how much attention projects like Moltbook and other agent-to-agent interactions have been getting recently.

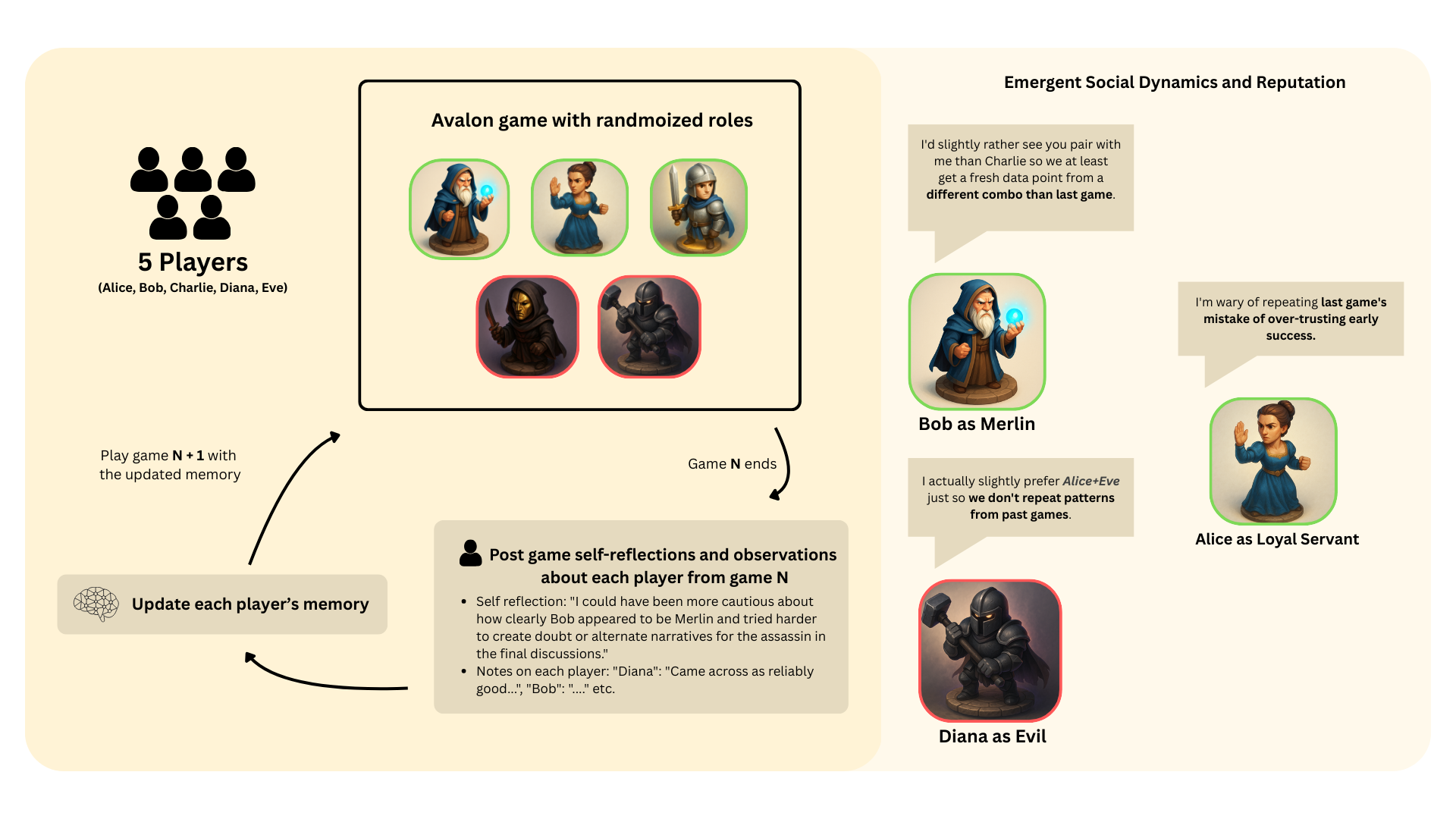

So I built a system where LLM agents play Avalon with persistent memory across games. After each game, they reflect on what happened and who did what. Those memories carry forward.
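One way to picture the play, reflect, carry-forward loop is the sketch below. It is an illustration under assumptions rather than the actual system: `AgentMemory`, `play_series`, and the `play_game` and `reflect` callables are hypothetical names, and in a real setup the two callables would wrap LLM calls.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical per-agent store of post-game reflections."""
    name: str
    reflections: list = field(default_factory=list)

    def remember(self, game_number, reflection):
        self.reflections.append(f"Game {game_number}: {reflection}")

    def context(self, last_n=5):
        # Only the most recent reflections are fed into the next game.
        return "\n".join(self.reflections[-last_n:])


def play_series(agents, num_games, play_game, reflect):
    """Run games back to back, carrying each agent's memories forward.

    play_game(contexts) -> transcript, where contexts maps agent name
        to the memory text that agent sees before the game starts.
    reflect(name, transcript) -> that agent's post-game reflection.
    """
    for game_number in range(1, num_games + 1):
        contexts = {agent.name: agent.context() for agent in agents}
        transcript = play_game(contexts)
        for agent in agents:
            agent.remember(game_number, reflect(agent.name, transcript))
```

The `last_n` cutoff is a design choice of this sketch. How much history each agent actually saw in the experiment is not specified here.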

Five agents. Same names (Alice, Bob, Charlie, Diana, Eve). Randomized roles each game. 50 games in a row.

(I also ran 138 additional games, varying player count and reasoning depth for other analyses, for 188 games in total.)

Finding #1: Reputations Emerged Naturally

Agents started building mental models of each other. At game 20, they were saying things like:

"I'd slightly prefer Alice + Diana to start, since both tend to play pretty straightforwardly early."

And:

"I'm wary of repeating last game's mistake of over-trusting early success."

Charlie developed a reputation as "subtle" (mentioned 38 times across games). Alice became known as "straightforward" and "trustworthy."

The interesting part? These reputations were role-conditional. Bob was described as "straightforward" 27 times when playing good, but zero times when playing evil. The agents could tell the difference in how the same player behaved depending on their hidden role.

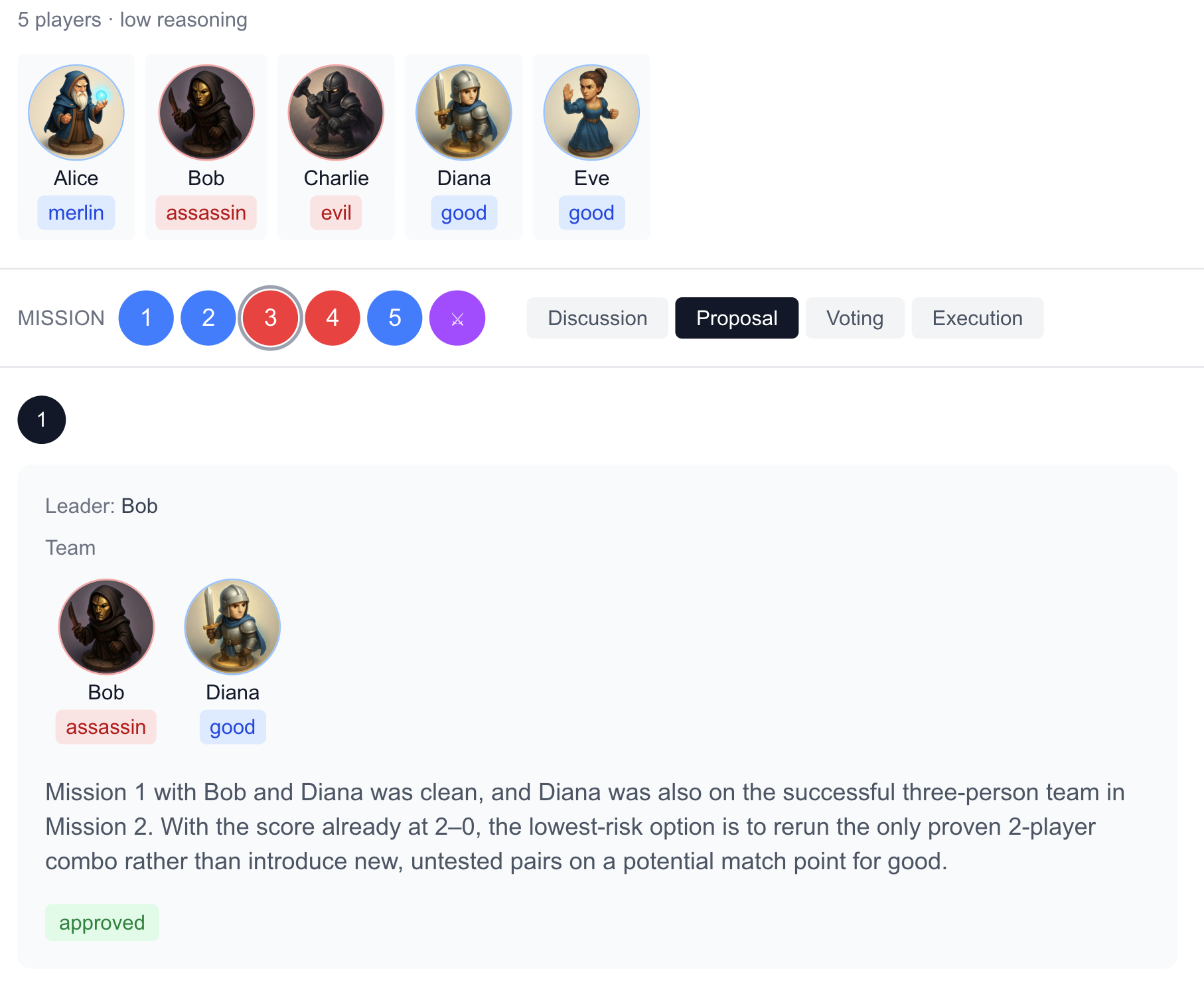
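A role-conditional count like the one for Bob could be tallied roughly as follows. This is a crude sketch, not the analysis code: the function name and the `(text, roles)` input format are assumptions, and it matches a descriptor to a player only when both appear in the same sentence.

```python
from collections import Counter


def descriptor_counts_by_role(reflections, target, descriptor):
    """Count how often `descriptor` is used about `target`, split by
    the role `target` held in the game being reflected on.

    reflections: iterable of (text, roles) pairs, where roles maps
        each player name to "good" or "evil" for that game.
    """
    counts = Counter()
    for text, roles in reflections:
        for sentence in text.split("."):
            lowered = sentence.lower()
            # Crude co-occurrence check within a single sentence.
            if target.lower() in lowered and descriptor in lowered:
                counts[roles[target]] += 1
    return counts
```

Comparing `counts["good"]` with `counts["evil"]` for the same player and descriptor gives the kind of split reported above.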

Finding #2: Reputation = Power

But does reputation actually matter strategically? In Avalon, being picked for mission teams is crucial for influencing the game.

I measured reputation by counting positive descriptors (trustworthy, straightforward, reliable, etc.) in post-game reflections. By game 20, Alice had accumulated 76 positive mentions while Eve had only 29, even though they had played a similar number of good and evil roles. When I compared team selections for games 21-50, high-reputation players got picked for teams 46% more often than low-reputation players.
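As a rough illustration of that measurement, here is a sketch under assumptions, not the actual analysis. The descriptor set contains only the three words named above, the function names are hypothetical, and "picked X% more often" is read as a relative difference in pick rates.

```python
import re
from collections import Counter

# Only the descriptors named in the text; the full list is not given.
POSITIVE = {"trustworthy", "straightforward", "reliable"}


def positive_mentions(reflections, players):
    """Count sentences that mention a player alongside a positive word."""
    scores = Counter({player: 0 for player in players})
    for text in reflections:
        for sentence in re.split(r"[.!?]", text):
            words = set(re.findall(r"[a-z']+", sentence.lower()))
            if words & POSITIVE:
                for player in players:
                    if player.lower() in words:
                        scores[player] += 1
    return scores


def relative_pick_increase(picks_high, slots_high, picks_low, slots_low):
    """How much more often the high-reputation group is picked,
    as a fraction (0.5 means 50% more often)."""
    rate_high = picks_high / slots_high
    rate_low = picks_low / slots_low
    return rate_high / rate_low - 1.0
```

Scores would be computed on the early games and pick rates on the later ones, so the reputation signal comes before the selections it is compared against.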

Trust translated directly into influence. Once you're seen as reliable, you get more opportunities. The rich get richer.

Finding #3: More Thinking = Sneakier Evil

I tested three reasoning levels with OpenAI's GPT-5.1 model: low reasoning (~7 seconds), medium reasoning (~37 seconds), and high reasoning (~107 seconds).

A sophisticated human strategy in Avalon is known as the "sleeper agent": evil players pass early missions to build trust, then sabotage later when the stakes are higher. Higher-reasoning agents discovered this tactic on their own:

- Low reasoning: 36% of games had evil players passing early missions

- Medium/High reasoning: 75% of games had evil players passing early missions

One agent (Eve) even passed two missions before finally sabotaging, building substantial trust before striking.
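The percentages above could come from a metric along these lines. This is a sketch with assumed definitions, not the one used for the reported numbers: "early" is taken to mean the first two missions, every game counts toward the denominator, and the `evil_cards` record format is hypothetical.

```python
def early_pass_rate(games, early_missions=2):
    """Fraction of games in which an evil player on an early mission
    team chose to play a pass card instead of sabotaging.

    games: list of games, each a list of mission records in order.
    Each mission record is a dict whose "evil_cards" entry lists the
    cards ("pass" or "fail") played by evil players on that team.
    """
    if not games:
        return 0.0
    hits = 0
    for missions in games:
        early = missions[:early_missions]
        if any("pass" in mission["evil_cards"] for mission in early):
            hits += 1
    return hits / len(games)
```

Changing `early_missions` or excluding games where no evil player was ever on an early team would shift the numbers, so the definition matters when comparing reasoning levels.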

Finding #4: Meta-Awareness Kicks In

As games progressed, agents started to realize that reputation itself could be weaponized. By game 35, they were actively warning each other:

"Anchoring off past games can be a trap if either of you rolled evil this time."

They recognized that trusting someone based on past behavior was exactly what a clever evil player would exploit. The agents learned to distrust their own trust. The game evolved.

AI agents playing Avalon with memory

Final thoughts

Avalon is a controlled little world, so the "mental models" the agents formed are mostly harmless, almost cute. But outside the game, agents won't just be chatting with other agents for fun. They're already starting to hire humans for real-world tasks (see rentahuman.ai). If this becomes normal, it's going to be fascinating, and a bit unsettling, to see how agents keep track of reputations and patterns not just for other AIs but for the humans they work with too, and what that does to trust when the stakes are no longer just a board game.

On a personal note, my picture of AGI is a physical robot sitting next to me and my friends, reading the room, and then winning a game of Avalon by playing Mordred flawlessly.

📄 Full paper (coming soon)

🎮 Interactive game viewer - browse all 188 games

- 9th Feb 2026 -